OpenResearcher: Unleashing AI for Accelerated Scientific Research

Summary: The rapid growth of scientific literature presents significant challenges for researchers striving to stay current with the latest advancements in their fields while also exploring new areas. OpenResearcher, an innovative platform, addresses this issue by leveraging Artificial Intelligence (AI) techniques to expedite the research process and answer diverse questions posed by researchers. Built on the foundation of Retrieval-Augmented Generation (RAG), OpenResearcher seamlessly integrates Large Language Models (LLMs) with up-to-date, domain-specific knowledge.

In addition to its core capabilities, OpenResearcher is equipped with a variety of tools designed to understand researchers' queries, search the scientific literature, filter retrieved information, provide accurate and comprehensive answers, and even refine these answers through self-improvement mechanisms. By flexibly utilizing these tools, OpenResearcher strikes a balance between efficiency and effectiveness. As a result, it empowers researchers to save time, uncover new insights, and drive scientific breakthroughs.
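The query, search, filter, and answer steps described above can be illustrated with a toy retrieval-augmented pipeline. This is only a minimal sketch under stated assumptions, not OpenResearcher's implementation: the bag-of-words cosine scoring, the `min_score` filter threshold, and the function names `retrieve` and `build_prompt` are all hypothetical, and the final LLM call is left out.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase a string and split it into punctuation-free word tokens."""
    words = (w.strip(".,;:!?()").lower() for w in text.split())
    return [w for w in words if w]


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, corpus, top_k=2, min_score=0.1):
    """Search step plus filter step: rank passages, keep the top_k above min_score."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(doc))), doc) for doc in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score >= min_score]


def build_prompt(query, passages):
    """Assemble the grounded prompt that would be handed to an LLM (call not shown)."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return f"Answer using only the sources below.\n{context}\nQuestion: {query}"


corpus = [
    "Diffusion models generate video from text prompts.",
    "State abstraction reduces the search space in strategy games.",
    "CLIP aligns images and text in one embedding space.",
]
query = "How does state abstraction help strategy games?"
print(build_prompt(query, retrieve(query, corpus)))
```

The filter threshold drops passages with no lexical overlap, so only the relevant passage reaches the prompt. A real system would likely use dense embeddings and a learned reranker in place of the word-count scoring assumed here.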

Strategy Game-Playing with Size-Constrained State Abstraction

Summary: Playing strategy games presents a significant challenge for artificial intelligence (AI) due to the large search space created by a diverse set of game components. Recent work has applied state abstraction techniques to search-based game AI, leading to substantial performance improvements. These techniques reduce the search space, for example by aggregating similar states. However, a major obstacle to their widespread use is the difficulty of evaluating the quality of an abstraction. To avoid biasing the search towards a local optimum, previous approaches often abandon the abstraction midway through the search process, which introduces a hyper-parameter that determines when to discard the current state abstraction.

In this work, a novel approach called size-constrained state abstraction (SCSA) is proposed. SCSA limits the maximum number of nodes that can be grouped together, thereby addressing the challenges of traditional state abstractions. Unlike previous methods, SCSA does not require abandoning the abstraction during the search. Empirical results from tests on three different strategy games demonstrate that the SCSA agent outperforms earlier methods, delivering robust performance across various games.
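The core constraint, a cap on how many nodes may share one abstract group, can be sketched in a few lines. The summary does not say how SCSA decides which nodes are similar or what happens once a group is full, so the `key_fn` similarity key and the "open a fresh group when full" policy below are assumptions made purely for illustration.

```python
def size_constrained_groups(nodes, key_fn, max_size):
    """Group nodes that share an abstraction key, never exceeding max_size per group.

    key_fn is a hypothetical similarity function: nodes with equal keys are
    candidates for aggregation. When a group is full, a new group with the
    same key is opened (an assumed policy, not taken from the paper).
    """
    if max_size < 1:
        raise ValueError("max_size must be at least 1")
    open_groups = {}  # key -> the group currently accepting nodes for that key
    groups = []
    for node in nodes:
        key = key_fn(node)
        group = open_groups.get(key)
        if group is None or len(group) >= max_size:
            group = []
            groups.append(group)
            open_groups[key] = group
        group.append(node)
    return groups


# Toy search-tree nodes as (name, heuristic value); equal values count as "similar".
nodes = [("a", 10), ("b", 11), ("c", 10), ("d", 10), ("e", 11)]
for group in size_constrained_groups(nodes, key_fn=lambda n: n[1], max_size=2):
    print([name for name, _ in group])
```

With `max_size=2`, the three nodes valued 10 are split into one group of two and one singleton instead of being merged into a single abstract node. Setting `max_size=1` disables aggregation entirely.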

The AI Scientist: Towards Fully Automated Open-Ended Scientific Discovery

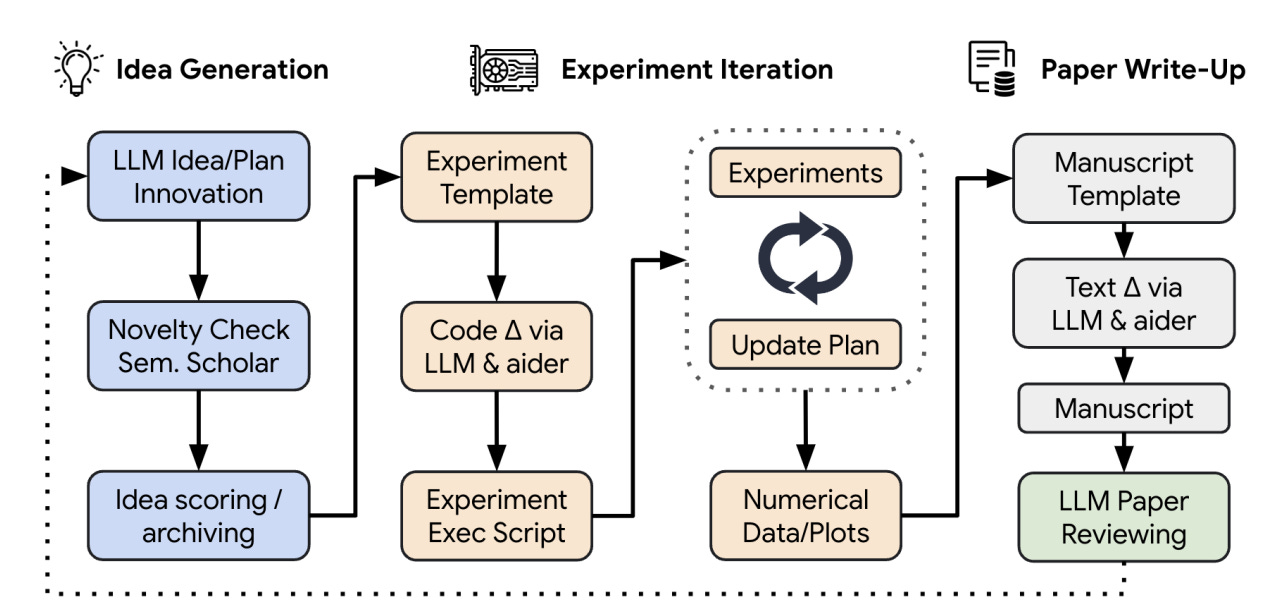

Summary: One of the grand challenges of artificial general intelligence is developing agents capable of conducting scientific research and discovering new knowledge independently. While frontier models have already been employed as aids to human scientists for tasks such as brainstorming ideas, writing code, and making predictions, they still only participate in a small portion of the scientific process. This paper introduces the first comprehensive framework for fully automatic scientific discovery, enabling advanced large language models to perform research autonomously and communicate their findings.

The framework, referred to as The AI Scientist, is designed to generate novel research ideas, write code, execute experiments, visualize results, and describe its findings by composing a complete scientific paper. It even simulates a review process for evaluating the research. This iterative process can be repeated indefinitely, functioning similarly to the human scientific community.

The AI Scientist's versatility is demonstrated by its application to three distinct subfields of machine learning: diffusion modeling, transformer-based language modeling, and learning dynamics. Each idea generated by The AI Scientist is implemented and developed into a full paper at a cost of less than $15 per paper. To assess the quality of these papers, an automated reviewer is designed and validated, achieving near-human performance in scoring papers. The results show that The AI Scientist can produce papers that meet or exceed the acceptance threshold of a top machine learning conference, as judged by the automated reviewer.

CogVideoX: Text-to-Video Diffusion Models with An Expert Transformer

Summary: CogVideoX is a large-scale diffusion transformer model designed for generating videos from text prompts. To model video data efficiently, CogVideoX employs a 3D Variational Autoencoder (VAE) that compresses videos along both the spatial and temporal dimensions, which makes complex video data more manageable to process.

To enhance the alignment between text and video, CogVideoX incorporates an expert transformer equipped with expert adaptive LayerNorm, enabling deep fusion between these two modalities. The model is trained using a progressive training technique, which makes CogVideoX particularly adept at producing coherent, long-duration videos that feature significant motion.
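One plausible reading of "expert adaptive LayerNorm" is that text tokens and video tokens share the transformer but each modality gets its own scale and shift applied after normalization. The sketch below illustrates only that idea in plain Python. The function names, the `(1 + scale)` modulation form, and the fixed modulation constants are assumptions; in a diffusion model these values would typically be predicted from a conditioning signal rather than hard-coded.

```python
import math


def layer_norm(x, eps=1e-5):
    """Normalize one token vector to zero mean and unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def expert_adaptive_layer_norm(tokens, modalities, modulation):
    """Apply LayerNorm, then a modality-specific scale and shift to each token.

    tokens:     list of token vectors (text and video tokens in one sequence)
    modalities: parallel list of labels, e.g. "text" or "video"
    modulation: dict mapping each label to a hypothetical (scale, shift) pair
    """
    out = []
    for token, modality in zip(tokens, modalities):
        scale, shift = modulation[modality]
        out.append([n * (1.0 + scale) + shift for n in layer_norm(token)])
    return out


tokens = [[1.0, 2.0, 3.0], [1.0, 2.0, 3.0]]
modalities = ["text", "video"]
modulation = {"text": (0.0, 0.0), "video": (1.0, 0.5)}
for modality, token in zip(modalities, expert_adaptive_layer_norm(tokens, modalities, modulation)):
    print(modality, [round(v, 3) for v in token])
```

Identical inputs come out differently depending on the modality label, which is the point of the separate "experts": the two feature distributions can be rescaled independently before attention mixes them.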

Additionally, CogVideoX benefits from a robust text-video data processing pipeline that includes advanced data preprocessing strategies and a sophisticated video captioning method. These elements significantly contribute to the model's enhanced performance, resulting in improved video generation quality and better semantic alignment between the text prompts and the generated videos.

Decoder Pre-Training with only Text for Scene Text Recognition

Summary: Scene text recognition (STR) pre-training methods have made significant strides, largely due to the use of synthetic datasets. However, the gap between synthetic and real images makes it difficult to learn feature representations that align well with real-world scenes, which limits the performance of these methods. Vision-language models like CLIP, which are pre-trained on extensive real image-text pairs, effectively align images and text within a unified embedding space. This suggests that real image representations could be derived from text alone.

Building on this premise, a novel method named Decoder Pre-training with only Text for STR (DPTR) is introduced. DPTR treats text embeddings generated by the CLIP text encoder as pseudo visual embeddings and uses them to pre-train the decoder. To further enhance this approach, an Offline Randomized Perturbation (ORP) strategy is also proposed. ORP enriches the diversity of text embeddings by incorporating natural image embeddings extracted from the CLIP image encoder, effectively guiding the decoder to capture the potential representations of real images.
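The perturbation idea can be sketched as mixing each text embedding with a randomly chosen, precomputed image embedding. The summary does not give the mixing rule, so the additive form, the `strength` weight, and the renormalization below are assumptions, and the short vectors stand in for real CLIP embeddings (no CLIP model is loaded here).

```python
import math
import random


def l2_normalize(v):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)


def perturb(text_emb, image_bank, strength, rng):
    """Mix a text embedding with one random image embedding from an offline bank.

    image_bank is a list of image embeddings computed ahead of time, which is
    the assumed meaning of "offline". strength is a hypothetical mixing weight.
    """
    image_emb = l2_normalize(rng.choice(image_bank))
    text_unit = l2_normalize(text_emb)
    mixed = [t + strength * i for t, i in zip(text_unit, image_emb)]
    return l2_normalize(mixed)


rng = random.Random(0)  # fixed seed so the sketch is reproducible
image_bank = [[1.0, 0.0], [0.0, 1.0]]  # placeholder image embeddings
text_emb = [3.0, 4.0]  # placeholder text embedding
pseudo_visual = perturb(text_emb, image_bank, strength=0.5, rng=rng)
print(pseudo_visual)
```

With `strength=0` the function returns the normalized text embedding unchanged, so the weight controls how far the pseudo visual embedding drifts toward the sampled image embedding.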