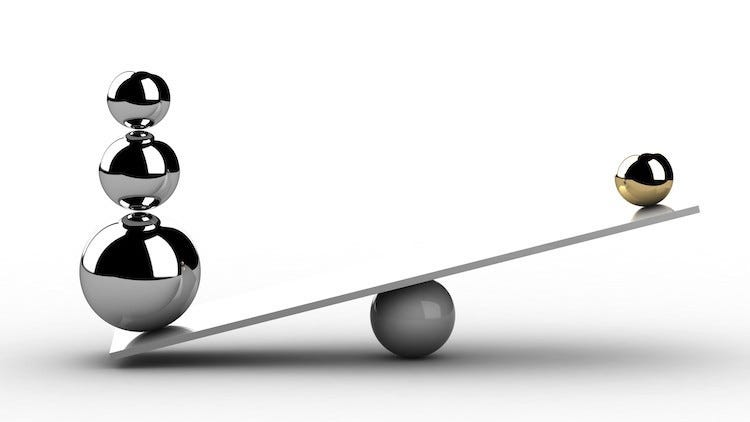

Class Imbalance Problem

Image Credit: https://towardsdatascience.com/yet-another-twitter-sentiment-analysis-part-1-tackling-class-imbalance-4d7a7f717d44

What is the Class Imbalance problem?

Class imbalance is a problem that occurs when one class (the majority) has far more data points than another (the minority).

Let’s consider an example: if your dataset has 100 rows of data, with 95 data points labelled “Apple” and 5 labelled “Banana”, a classification model trained on it can reach a very high accuracy (95%) simply by classifying everything as “Apple”. This is problematic because the model never recognizes the minority class, so its real classification performance is sub-optimal.
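To make the Apple/Banana example concrete, here is a minimal sketch that scores a classifier which always predicts the majority class. The helper names `accuracy` and `recall` are illustrative and written by hand, not taken from any library.

```python
# 95 "Apple" rows and 5 "Banana" rows, as in the example above.
y_true = ["Apple"] * 95 + ["Banana"] * 5
# A degenerate classifier that always predicts the majority class.
y_pred = ["Apple"] * 100


def accuracy(y_true, y_pred):
    """Fraction of rows where the prediction matches the label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred, label):
    """Fraction of rows of class `label` that were predicted as `label`."""
    tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
    fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
    return tp / (tp + fn) if tp + fn else 0.0


print(accuracy(y_true, y_pred))          # 0.95
print(recall(y_true, y_pred, "Banana"))  # 0.0
```

The overall score looks excellent, yet not a single “Banana” is ever found.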

How does the Class Imbalance issue arise in data?

There are two major causes of class imbalance:

The data is naturally imbalanced

The minority class data is too expensive to obtain, which results in an imbalanced dataset.

What are the possible remedies for learning from imbalanced data?

Changing Class distributions by re-sampling techniques.

Feature selection at the feature level

Ensemble approach for final classification

Manipulate classifiers internally.

Changing Class distributions

What are the 3 basic techniques for changing class distributions?

Heuristic & non-Heuristic Under-Sampling

Heuristic & non-Heuristic Over-Sampling

Advanced Sampling

What is Under-Sampling?

The basic method is Random Under-Sampling, which creates a uniform class distribution by randomly eliminating majority class examples. The drawback is that it can discard data that would have been important for the classifier.
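A minimal sketch of Random Under-Sampling using only the standard library. The function name `random_under_sample` and the `seed` parameter are assumptions made for this illustration.

```python
import random
from collections import Counter


def random_under_sample(X, y, seed=0):
    """Randomly drop rows until every class is as small as the smallest one."""
    rng = random.Random(seed)
    # Group row indices by class label.
    by_class = {}
    for i, label in enumerate(y):
        by_class.setdefault(label, []).append(i)
    n_min = min(len(idx) for idx in by_class.values())
    # Keep a random subset of size n_min from each class.
    keep = []
    for idx in by_class.values():
        keep.extend(rng.sample(idx, n_min))
    keep.sort()
    return [X[i] for i in keep], [y[i] for i in keep]


X = [[i] for i in range(100)]
y = ["Apple"] * 95 + ["Banana"] * 5
X_res, y_res = random_under_sample(X, y)
print(Counter(y_res))
```

After re-sampling, 90 of the 95 “Apple” rows are gone, which is exactly the information loss described above.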

Heuristic under-sampling methods rely on one of two noise hypotheses to decide which examples to remove:

One considers the examples near the classification boundary between the 2 classes as noise.

The other considers the examples whose neighbors mostly have different labels as noise.
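The neighbor-based noise hypothesis can be sketched as follows. This is an illustrative cleaning rule, not a specific published algorithm: the function name, the choice of `k=3`, Euclidean distance, and the "more than half of the neighbors disagree" threshold are all assumptions.

```python
import math


def noisy_majority_indices(X, y, majority_label, k=3):
    """Flag majority examples whose k nearest neighbors mostly carry another label."""
    noisy = []
    for i, (xi, yi) in enumerate(zip(X, y)):
        if yi != majority_label:
            continue
        # Indices of all other points, closest first.
        others = sorted(
            (j for j in range(len(X)) if j != i),
            key=lambda j: math.dist(xi, X[j]),
        )
        different = sum(y[j] != yi for j in others[:k])
        if different > k / 2:
            noisy.append(i)
    return noisy


# Four majority points in one corner, plus one majority point sitting
# inside the minority cluster.
X = [(0, 0), (0, 1), (1, 0), (1, 1), (5, 5), (5, 6), (6, 5), (6, 6)]
y = ["maj"] * 5 + ["min"] * 3
print(noisy_majority_indices(X, y, "maj"))  # [4]
```

Removing the flagged majority examples shrinks the majority class while leaving the bulk of its cluster untouched.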

What is Bayesian Risk?

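Bayes risk is the lowest error rate any classifier can achieve on a problem, and it grows as the class densities overlap more. If the densities of the classes overlap only slightly, the Bayes risk is low, and the Condensed Nearest Neighbor Rule (CNN) tends to pick out the examples near the boundary between the classes.

CNN is therefore useful only when the class densities have a small overlap. When the overlap is large, it retains most of the examples and achieves little reduction.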
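A minimal sketch of the Condensed Nearest Neighbor Rule: start with one stored example, then keep adding any example that the current store misclassifies with 1-NN, until a full pass adds nothing. Seeding the store with the first row and using Euclidean distance are assumptions of this sketch.

```python
import math


def nearest_label(x, store_X, store_y):
    """Label of the stored example closest to x (1-NN)."""
    j = min(range(len(store_X)), key=lambda j: math.dist(x, store_X[j]))
    return store_y[j]


def condensed_nn(X, y):
    """Return indices of a subset that classifies every row correctly with 1-NN."""
    store = [0]  # assumption: seed the store with the first example
    changed = True
    while changed:
        changed = False
        for i in range(len(X)):
            if i in store:
                continue
            store_X = [X[j] for j in store]
            store_y = [y[j] for j in store]
            # Misclassified by the current store: it must be kept.
            if nearest_label(X[i], store_X, store_y) != y[i]:
                store.append(i)
                changed = True
    return sorted(store)


# Two well-separated classes: small overlap, so few examples are needed.
X = [(0,), (1,), (2,), (10,), (11,), (12,)]
y = ["a", "a", "a", "b", "b", "b"]
print(condensed_nn(X, y))  # [0, 3]
```

With well-separated classes, two stored examples are enough to classify all six rows, so the other four can be dropped.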

What is Over-Sampling?

Random Over-Sampling is a non-heuristic approach that randomly replicates minority class examples to achieve a uniform class distribution. Because it adds exact copies of existing examples, it increases the likelihood of over-fitting. The larger training set also makes the learning process longer.
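A minimal sketch of random over-sampling with the standard library. The function name `random_over_sample` and the `seed` parameter are assumptions made for this illustration.

```python
import random
from collections import Counter


def random_over_sample(X, y, seed=0):
    """Randomly duplicate rows until every class is as large as the largest one."""
    rng = random.Random(seed)
    # Group row indices by class label.
    by_class = {}
    for i, label in enumerate(y):
        by_class.setdefault(label, []).append(i)
    n_max = max(len(idx) for idx in by_class.values())
    # Keep every original row, then add random duplicates of the smaller classes.
    keep = list(range(len(y)))
    for idx in by_class.values():
        keep.extend(rng.choices(idx, k=n_max - len(idx)))
    return [X[i] for i in keep], [y[i] for i in keep]


X = [[i] for i in range(100)]
y = ["Apple"] * 95 + ["Banana"] * 5
X_res, y_res = random_over_sample(X, y)
print(Counter(y_res))
```

The classes are now balanced, but the 95 “Banana” rows are copies of only 5 distinct examples, which is why over-fitting becomes more likely.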

SMOTE Algorithm (SMOTE stands for Synthetic Minority Oversampling Technique)

SMOTE generates synthetic minority samples to create a uniform class distribution. The main idea of SMOTE is to form new minority class examples by interpolating between minority class examples that lie close together. By interpolating instead of replicating, SMOTE reduces the over-fitting problem and spreads the decision region of the minority class.
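The interpolation step can be sketched as below. This is a simplified illustration rather than a reference implementation: the function name `smote`, the default `k=2`, Euclidean distance, and the use of a single random gap per synthetic point are assumptions.

```python
import math
import random


def smote(minority, n_new, k=2, seed=0):
    """Create n_new synthetic points between minority points and their neighbors."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        # Pick a random minority example.
        i = rng.randrange(len(minority))
        x = minority[i]
        # Find its k nearest minority neighbors.
        neighbors = sorted(
            (j for j in range(len(minority)) if j != i),
            key=lambda j: math.dist(x, minority[j]),
        )[:k]
        neighbor = minority[rng.choice(neighbors)]
        # Place the new point somewhere on the segment from x to the neighbor.
        gap = rng.random()
        synthetic.append(tuple(a + gap * (b - a) for a, b in zip(x, neighbor)))
    return synthetic


minority = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
new_points = smote(minority, n_new=6)
print(new_points)
```

Each synthetic point lies between two existing minority examples instead of duplicating one of them.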

What is Borderline-SMOTE?

Borderline-SMOTE was introduced to correctly classify the examples that lie on the boundary between the minority and majority classes. It generates synthetic examples only from the minority examples that lie on this borderline, instead of over-sampling every minority example.
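A minimal sketch of how the borderline minority examples could be identified. A minority example is treated as borderline when at least half, but not all, of its `m` nearest neighbors belong to the majority class. The function name `danger_set`, the default `m=3`, and Euclidean distance are assumptions of this sketch, and the synthetic-sample generation step is left out.

```python
import math


def danger_set(X, y, minority_label, m=3):
    """Indices of minority examples that sit on the class borderline."""
    danger = []
    for i, (xi, yi) in enumerate(zip(X, y)):
        if yi != minority_label:
            continue
        # m nearest neighbors among all other examples, of any class.
        neighbors = sorted(
            (j for j in range(len(X)) if j != i),
            key=lambda j: math.dist(xi, X[j]),
        )[:m]
        n_major = sum(y[j] != minority_label for j in neighbors)
        # All-majority neighborhoods are treated as noise, mostly-minority as safe.
        if m / 2 <= n_major < m:
            danger.append(i)
    return danger


X = [(0.0,), (1.0,), (2.0,), (3.0,), (3.4,), (4.5,), (5.5,), (6.5,)]
y = ["maj"] * 4 + ["min"] * 4
print(danger_set(X, y, "min"))  # [4]
```

Only the minority example at 3.4, right next to the majority class, is selected as a seed for new synthetic samples.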

What is Advanced Sampling?

Advanced sampling re-samples the data based on the results of preliminary classifications. Boosting is one example: it is an iterative algorithm that places different weights on the training examples at each iteration, giving more weight to the examples the previous classifier got wrong. In effect, it alters the distribution of the training data. Overall, when the dataset is severely skewed, under-sampling and over-sampling techniques are combined to improve the generalization of the classification learner.

To be continued in Series 2 of the Class Imbalance article, which will cover:
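The re-weighting idea behind boosting can be sketched with a single AdaBoost-style update. AdaBoost is used here only as a familiar example of boosting; the function name `reweight` and the toy numbers are assumptions, and the weighted error is assumed to be strictly between 0 and 1.

```python
import math


def reweight(weights, misclassified):
    """One AdaBoost-style round: raise weights of misclassified rows, lower the rest."""
    # Weighted error of the current weak classifier.
    eps = sum(w for w, wrong in zip(weights, misclassified) if wrong)
    # Importance of the classifier; larger when the error is smaller.
    alpha = 0.5 * math.log((1 - eps) / eps)
    updated = [
        w * math.exp(alpha if wrong else -alpha)
        for w, wrong in zip(weights, misclassified)
    ]
    # Normalize so the weights form a distribution again.
    total = sum(updated)
    return [w / total for w in updated]


# Ten rows with equal weight; the weak classifier got the first two wrong.
weights = [0.1] * 10
misclassified = [True, True] + [False] * 8
new_weights = reweight(weights, misclassified)
print(new_weights)
```

After the update, the two misclassified rows together carry about half of the total weight, so the next classifier is pushed to focus on them.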

Manipulating Classifiers Internally

Feature Selection in Imbalanced data

Cost Sensitive Learning (Cost -Matrix)

One Class Learning

Evaluation Metrics of Classification algorithms