Randomized Autoregressive Visual Generation

Summary: The paper presents Randomized AutoRegressive modeling (RAR), an approach to visual generation that achieves state-of-the-art results while preserving full compatibility with language modeling frameworks. The authors propose a simple yet effective modification to the standard autoregressive training process. During training, the input sequence, normally arranged in raster order, is randomly permuted into a different factorization order with probability r. This probability starts at 1 and gradually decreases to 0 over the course of training, so the model learns to maximize the expected likelihood over all possible orders early on and converges to raster order by the end. This strategy enhances the model's ability to use bidirectional context, significantly improving image generation performance.
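The annealing idea described above can be illustrated with a minimal sketch. It assumes a linear decay of the permutation probability, which is only one way to "gradually decrease" it; the function names `permutation_probability` and `order_for_step` are hypothetical and not taken from the paper.

```python
import random


def permutation_probability(step: int, total_steps: int) -> float:
    """Anneal the permutation probability from 1.0 down to 0.0.

    Assumption: a linear schedule. The summary only says the value
    decreases gradually over training.
    """
    if total_steps <= 1:
        return 0.0
    frac = min(max(step / (total_steps - 1), 0.0), 1.0)
    return 1.0 - frac


def order_for_step(num_tokens: int, step: int, total_steps: int,
                   rng: random.Random) -> list[int]:
    """Return the token order to train on at this step.

    With probability r the raster order is replaced by a random
    permutation; otherwise the raster order is kept.
    """
    r = permutation_probability(step, total_steps)
    order = list(range(num_tokens))  # raster order: 0, 1, 2, ...
    if rng.random() < r:
        rng.shuffle(order)
    return order
```

Early in training almost every sequence is permuted, while at the final step the order is always raster, so inference can proceed as in an ordinary autoregressive model.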

CALE: Continuous Arcade Learning Environment

Summary: The Arcade Learning Environment (ALE), introduced by Bellemare et al. in 2013, serves as a pivotal benchmark for evaluating the performance of reinforcement learning (RL) agents. ALE features a diverse collection of Atari 2600 games, where agents learn autonomously by playing and making decisions based on high-dimensional pixel inputs. The significance of ALE lies in its ability to test agents on generality, capability, and autonomy, offering over 100 games that challenge various aspects of agent learning.

The benchmark gained prominence when Mnih et al. (2015) demonstrated that combining deep learning with RL could achieve super-human performance on these games, marking a milestone in Deep Reinforcement Learning. Over time, ALE has evolved with enhancements like stochastic transitions, new game modes, and multi-player support. Through these updates, ALE has remained a vital platform for testing and developing generally capable RL agents across diverse and difficult scenarios.

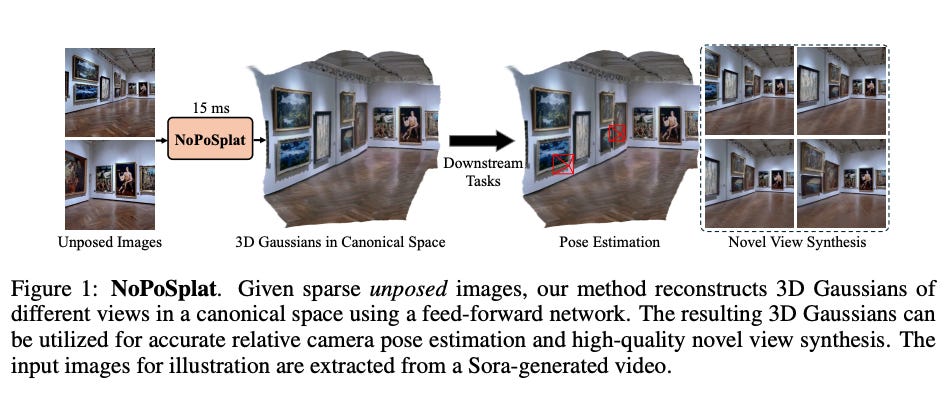

No Pose, No Problem: Surprisingly Simple 3D Gaussian Splats from Sparse Unposed Images

Summary: NoPoSplat is a novel feed-forward model designed for reconstructing 3D scenes, parameterized by 3D Gaussians, from unposed sparse multi-view images. Unlike traditional methods, NoPoSplat operates without the need for precise pose inputs, anchoring one input view’s camera coordinates as a canonical space. This allows the model to predict Gaussian primitives across all views without requiring coordinate transformations, reducing errors tied to per-frame Gaussian adjustments and pose estimation. The model addresses scale ambiguity by converting camera intrinsics into a token embedding and incorporating it with image tokens, improving scale prediction.

Trained using only photometric loss, NoPoSplat enables real-time 3D Gaussian reconstruction during inference. It also excels in novel view synthesis and pose estimation tasks. A two-stage, coarse-to-fine pipeline is employed for pose estimation, achieving substantial performance gains over existing methods, even without ground truth depth. Overall, NoPoSplat advances pose-free 3D reconstruction and demonstrates real-world utility.

SelfCodeAlign: Self-Alignment for Code Generation

Summary: SelfCodeAlign introduces a fully transparent and permissive approach for aligning code large language models (LLMs) without relying on extensive human annotations or distillation. The method enhances the instruction-following capabilities of code LLMs through a self-alignment process in which the base model itself generates the training data. The pipeline extracts coding concepts from seed snippets to create diverse tasks, samples multiple responses per task, and validates them using sandboxed test cases. Successfully validated examples are then used for instruction tuning.
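The validation step can be sketched as a simple filter. This is an illustrative sketch only: the helper names `passes_tests` and `filter_validated` and the dictionary keys are hypothetical, and a plain subprocess with a timeout stands in for the sandbox, which it does not replace in terms of isolation.

```python
import subprocess
import sys


def passes_tests(response_code: str, test_code: str,
                 timeout_s: float = 5.0) -> bool:
    """Run a candidate response together with its tests in a child process.

    Assumption: tests are plain assertions, so a zero exit code means
    every test passed. A subprocess is NOT a real sandbox.
    """
    program = response_code + "\n\n" + test_code
    try:
        result = subprocess.run(
            [sys.executable, "-c", program],
            capture_output=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return False
    return result.returncode == 0


def filter_validated(candidates: list[dict]) -> list[dict]:
    """Keep only candidates whose response passes its own tests."""
    return [c for c in candidates
            if passes_tests(c["response"], c["tests"])]
```

Only the candidates that survive this filter would be kept as instruction-tuning examples.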

Using SelfCodeAlign, the researchers generated a dataset of 74k instruction-response pairs with CodeQwen1.5-7B, leading to a model that achieved a 67.1 pass@1 on HumanEval+, outperforming larger models like CodeLlama-70B-Instruct. The approach shows consistent improvements over previous methods like OctoPack and proves effective across various LLM sizes. Additionally, the method outperforms distillation-based techniques from GPT-4o and GPT-3.5. SelfCodeAlign also contributed to the development of StarCoder2-Instruct, a state-of-the-art, self-aligned, and open-source code LLM.
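For readers unfamiliar with the metric, pass@1 is the probability that a single sampled solution passes all tests for a problem, averaged over problems. The sketch below uses the widely used unbiased pass@k estimator; whether the reported 67.1 was computed this way or with a single greedy sample per problem is not stated in the summary, so treat this as background rather than the authors' evaluation code.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: number of samples generated for the problem
    c: number of those samples that pass all tests
    k: sample budget being evaluated (k <= n)
    """
    if n - c < k:
        # Every size-k subset contains at least one passing sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems given (n, c) pairs per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

For k = 1 the estimator reduces to c / n, the fraction of samples that pass.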

FlowLLM: Flow Matching for Material Generation with Large Language Models as Base Distributions

Summary: Material discovery holds the promise of transforming fields such as carbon capture, renewable energy, and electronics. The vastness of chemical space, however, makes comprehensive experimental exploration infeasible. In response to this challenge, the paper introduces FlowLLM, an innovative generative model that integrates large language models (LLMs) with Riemannian flow matching (RFM) to generate novel crystalline materials. FlowLLM begins by fine-tuning an LLM to model meta-stable crystals in a textual format, providing a strong base distribution. These samples are then converted into a graph representation, where the RFM model refines atomic coordinates and lattice parameters.
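To give a feel for what refining atomic coordinates with a flow on a manifold involves, here is a minimal sketch under stated assumptions: fractional coordinates are treated as points on a flat torus (each coordinate wraps around at 1), and the conditional path between a base sample and a target is the shortest wrapped straight line. The summary does not spell out these details, and the function names are hypothetical.

```python
import math


def wrap_displacement(delta: float) -> float:
    """Map a coordinate difference to the shortest signed move in [-0.5, 0.5)."""
    return delta - math.floor(delta + 0.5)


def geodesic_point(x0: list[float], x1: list[float], t: float) -> list[float]:
    """Point at time t on the shortest periodic path from x0 to x1."""
    return [(a + t * wrap_displacement(b - a)) % 1.0
            for a, b in zip(x0, x1)]


def target_velocity(x0: list[float], x1: list[float]) -> list[float]:
    """Constant velocity along that path; a flow model regresses onto this."""
    return [wrap_displacement(b - a) for a, b in zip(x0, x1)]
```

In this picture, `x0` would be a coordinate from the LLM-generated base sample and `x1` the corresponding coordinate of a training crystal; moving from 0.9 to 0.2 goes forward across the cell boundary by 0.3 rather than backward by 0.7.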

FlowLLM achieves over three times the generation rate of stable materials compared to existing methods and shows a marked increase in producing stable, unique, and novel crystals. Furthermore, the crystals generated are closer to their relaxed states, significantly reducing computational costs associated with post-generation relaxation. This represents a major advance in efficient material generation.