Top AI Papers - November, 2024

Optimizing Multispectral Object Detection: A Bag of Tricks and Comprehensive Benchmarks

Summary: Multispectral object detection, which combines RGB and thermal infrared (TIR) modalities, is widely regarded as a difficult task. It depends on effective feature extraction and robust fusion strategies, and it must cope with spectral discrepancies, spatial misalignment, and environmental dependencies between RGB and TIR images. These factors hinder the generalization of detection systems across varied scenarios. While many studies aim to address these limitations, it remains hard to separate the performance gains of the multispectral systems themselves from the gains contributed by optimization techniques. Moreover, there are no specialized training methods for adapting high-performing single-modality detection models to multispectral tasks, and no standardized benchmark exists, which further complicates progress. To address these gaps, the paper proposes a fair and reproducible benchmark that systematically evaluates training techniques, classifies existing multispectral detection methods, assesses hyper-parameter sensitivity, and standardizes core configurations.

DuMapper: Towards Automatic Verification of Large-Scale POIs with Street Views at Baidu Maps

Summary: As mobile devices become integral to daily life, web mapping services have emerged as indispensable tools. A key component of these services is the point of interest (POI) database, which stores detailed multimodal information about billions of locations, such as shops and banks, directly linked to users' everyday activities. Ensuring the accuracy of such vast POI databases is crucial. Traditionally, companies rely on volunteered geographic information (VGI) platforms, where thousands of crowd-workers and expert mappers verify POIs. However, this approach comes with significant financial costs, often reaching millions annually. To reduce these expenses, Baidu Maps introduced DuMapper, an automated system designed for large-scale POI verification using multimodal street-view data. DuMapper takes signboard images and geographic coordinates as input and generates low-dimensional vectors, so that approximate nearest neighbor (ANN) algorithms can search and verify POIs in the database within milliseconds. This significantly boosts verification throughput, offering a cost-effective and scalable solution.
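
The vector-matching step described above can be illustrated with a minimal sketch. It is not DuMapper's implementation: it uses an exact brute-force cosine-similarity scan as a stand-in for an ANN index, and the function names, the toy three-dimensional vectors, and the 0.9 threshold are all assumptions made for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def nearest_poi(query, database, threshold=0.9):
    """Return (poi_id, similarity) for the closest stored vector.

    Exact linear scan; a production system would replace this loop
    with an ANN index. The threshold is a hypothetical cut-off below
    which the query is treated as matching no known POI.
    """
    best_id, best_sim = None, -1.0
    for poi_id, vec in database.items():
        sim = cosine_similarity(query, vec)
        if sim > best_sim:
            best_id, best_sim = poi_id, sim
    if best_sim < threshold:
        return None, best_sim
    return best_id, best_sim


# Toy database: hypothetical POI ids mapped to made-up embedding vectors.
database = {
    "poi_bank": [0.9, 0.1, 0.0],
    "poi_cafe": [0.1, 0.9, 0.2],
    "poi_shop": [0.0, 0.2, 0.9],
}

# A made-up query vector, standing in for a signboard embedding.
query = [0.85, 0.15, 0.05]
match_id, similarity = nearest_poi(query, database)
```

Here the query resolves to `poi_bank`, while a vector that is not close to any stored entry, such as `[0.5, 0.5, 0.5]`, returns `None`. The linear scan costs time proportional to the database size, which is why a system at the scale described would need an approximate index instead.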

Scaling nnU-Net for CBCT Segmentation

Summary: This paper presents a customized approach to adapting the nnU-Net framework for multi-structure segmentation on Cone Beam Computed Tomography (CBCT) images, developed as part of the ToothFairy2 Challenge. Building on the nnU-Net ResEnc L model, the method adjusts patch size, network architecture, and data augmentation techniques to address the specific challenges of dental CBCT imaging. The approach achieved a mean Dice coefficient of 0.9253 and an HD95 of 18.472 on the test set, resulting in a mean rank of 4.6 and securing first place in the challenge. The source code is publicly available.
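
For readers unfamiliar with the headline metric, the following is a minimal sketch of a Dice coefficient computed per class and averaged. It assumes flat lists of integer labels and treats two empty masks as perfect agreement. The challenge's official evaluation may aggregate differently (for example per case), so this illustrates the formula rather than reproducing the scoring code.

```python
def dice_coefficient(pred, target):
    """Dice = 2 * |A and B| / (|A| + |B|) for two flat binary masks."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    if total == 0:
        # Both masks empty: counted as perfect agreement (an assumed convention).
        return 1.0
    return 2.0 * intersection / total


def mean_dice(pred_labels, target_labels, classes):
    """Average the per-class Dice over the given foreground classes."""
    scores = []
    for c in classes:
        pred = [v == c for v in pred_labels]
        target = [v == c for v in target_labels]
        scores.append(dice_coefficient(pred, target))
    return sum(scores) / len(scores)


# Toy 8-voxel volume with two foreground classes (labels 1 and 2).
pred_labels = [0, 1, 1, 2, 2, 2, 0, 1]
target_labels = [0, 1, 1, 1, 2, 2, 0, 0]
score = mean_dice(pred_labels, target_labels, classes=[1, 2])
```

In this toy case class 1 scores 2/3 and class 2 scores 0.8, giving a mean of about 0.733. Dice rewards overlap only, which is why it is usually reported alongside a boundary-distance metric such as HD95.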

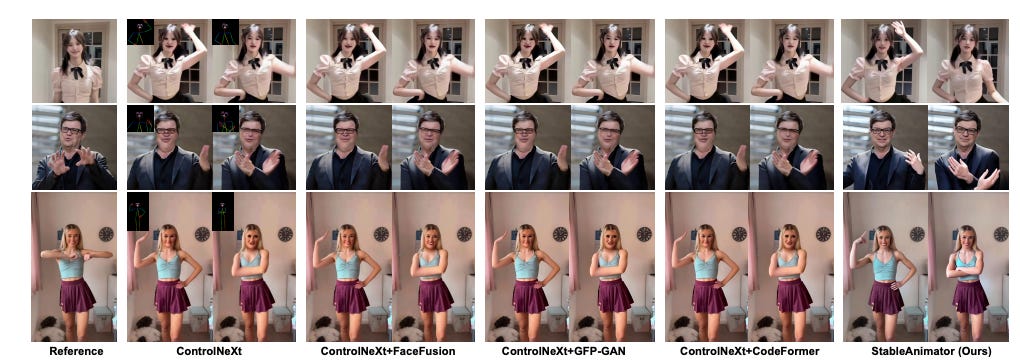

StableAnimator: High-Quality Identity-Preserving Human Image Animation

Summary: Maintaining identity (ID) consistency remains a key challenge for current diffusion models in human image animation. This paper introduces StableAnimator, the first end-to-end video diffusion framework designed to synthesize high-quality videos while preserving ID without requiring post-processing. StableAnimator builds on a video diffusion model with tailored modules for both training and inference, specifically targeting identity consistency. It begins by extracting image and face embeddings using pre-trained extractors, with face embeddings further refined via interaction with image embeddings through a global content-aware Face Encoder. To address interference from temporal layers and ensure ID alignment, the framework incorporates a novel distribution-aware ID Adapter. Additionally, during inference, StableAnimator employs a unique optimization approach based on the Hamilton-Jacobi-Bellman (HJB) equation to enhance face quality. By integrating this optimization into the diffusion denoising process, the model constrains the denoising path, effectively supporting ID preservation. Experiments across multiple benchmarks demonstrate the qualitative and quantitative advantages of StableAnimator in human animation tasks.

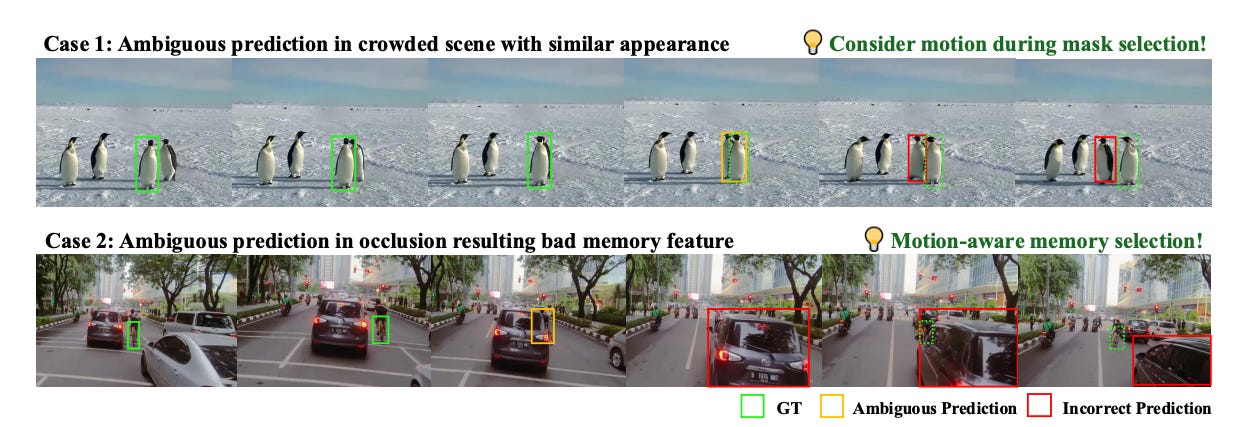

SAMURAI: Adapting Segment Anything Model for Zero-Shot Visual Tracking with Motion-Aware Memory

Summary: The Segment Anything Model 2 (SAM 2) has proven effective for object segmentation but encounters challenges in visual object tracking, particularly in scenarios involving crowded scenes, rapid object movements, or self-occlusions. Its fixed-window memory strategy does not take the quality of the stored memories into account, leading to error accumulation over video frames. To address these limitations, SAMURAI is introduced as an adaptation of SAM 2 specifically designed for visual object tracking. SAMURAI incorporates a motion-aware memory selection mechanism that uses temporal motion cues to predict object trajectories and refine mask selection. This approach enables robust and precise tracking without requiring retraining or fine-tuning. Operating in real time, SAMURAI demonstrates strong zero-shot performance on diverse benchmark datasets, achieving a 7.1% gain in AUC on LaSOT-ext and a 3.5% improvement in AO on GOT-10k. Furthermore, SAMURAI achieves results comparable to fully supervised trackers on LaSOT, underscoring its effectiveness in complex tracking scenarios and its suitability for real-world applications in dynamic environments.
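
The idea of using motion cues to refine mask selection can be sketched in a simplified form. The summary does not specify the motion model or the scoring rule, so everything here is an assumption: a constant-velocity box extrapolation, a weighted sum of motion agreement (IoU) and mask confidence, and a hypothetical weight `alpha`. It is an illustration of the general idea, not SAMURAI's method.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def predict_box(prev_box, last_box):
    """Constant-velocity guess: repeat the last frame-to-frame displacement."""
    return tuple(l + (l - p) for p, l in zip(prev_box, last_box))


def select_candidate(prev_box, last_box, candidates, alpha=0.5):
    """Pick the (box, confidence) candidate with the best combined score.

    alpha is a hypothetical weight balancing motion agreement against
    the candidate's own confidence. candidates must be non-empty.
    """
    predicted = predict_box(prev_box, last_box)

    def score(candidate):
        box, confidence = candidate
        return alpha * iou(predicted, box) + (1.0 - alpha) * confidence

    return max(candidates, key=score)


# The target moved 5 px to the right between the two previous frames.
prev_box = (0, 0, 10, 10)
last_box = (5, 0, 15, 10)

# Two candidate masks summarized as (bounding box, confidence).
candidates = [
    ((10, 0, 20, 10), 0.6),  # consistent with the motion, lower confidence
    ((0, 0, 10, 10), 0.9),   # confident distractor at the old location
]
chosen_box, chosen_conf = select_candidate(prev_box, last_box, candidates)
```

In this example the lower-confidence candidate wins because it matches the predicted position, which mirrors the failure case described above: a confident but wrong mask in a crowded scene would otherwise be selected and stored in memory.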