Top AI Papers of October 2024

Hallo2: Long-Duration and High-Resolution Audio-Driven Portrait Image Animation

Summary: Hallo2 is a substantial step forward in audio-driven portrait animation, generating videos up to an hour long at 4K resolution with strong consistency and control. It tackles common problems such as appearance drift by applying a patch-drop technique combined with Gaussian noise, which improves visual coherence over long sequences. It also accepts semantic text labels for expression control, allowing more creative direction than audio cues alone. Experiments on datasets such as HDTF, CelebV, and the recently released "Wild" dataset confirm that Hallo2 generates high-quality, audio-driven portrait animations, setting a new state of the art for long-duration video synthesis.
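To make the patch-drop idea concrete, here is a minimal sketch of one way such an augmentation could look. It is not Hallo2's implementation: the function name, the parameters (`patch`, `drop_prob`, `noise_std`), and the choice to zero dropped patches while adding noise to the remaining pixels are all assumptions made for illustration, applied to a small grayscale frame stored as nested lists.

```python
import random


def patch_drop_with_noise(image, patch=2, drop_prob=0.25, noise_std=0.1, seed=0):
    """Return a corrupted copy of a 2D grayscale image (list of rows of floats).

    Hypothetical parameters for this sketch:
      patch     - side length of each square patch
      drop_prob - probability that a whole patch is zeroed out
      noise_std - standard deviation of Gaussian noise added to kept pixels
    """
    rng = random.Random(seed)
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]  # copy so the input is left untouched
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            dropped = rng.random() < drop_prob  # one decision per patch
            for y in range(top, min(top + patch, height)):
                for x in range(left, min(left + patch, width)):
                    if dropped:
                        out[y][x] = 0.0
                    else:
                        out[y][x] += rng.gauss(0.0, noise_std)
    return out


frame = [[1.0] * 4 for _ in range(4)]
augmented = patch_drop_with_noise(frame, patch=2, drop_prob=0.5, noise_std=0.05)
```

The intuition is that a model trained on conditioning frames corrupted this way cannot simply copy their appearance, so it has to rely more on the reference portrait, which is one plausible reading of how the technique limits drift.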

Allegro: Open the Black Box of Commercial-Level Video Generation Model

Summary: Video generation has advanced rapidly thanks to a wealth of open-source research papers and model training tools. However, reaching commercial-level quality remains difficult because the publicly available resources are still limited. This study introduces Allegro, a model that narrows the gap with improved quality and temporal consistency. Alongside Allegro, the paper presents a complete methodology for training high-performance, commercial-grade video models, covering data preparation, model design, training pipelines, and evaluation. In user studies, Allegro outperformed existing open-source models and most commercial models, ranking just behind Hailuo and Kling.

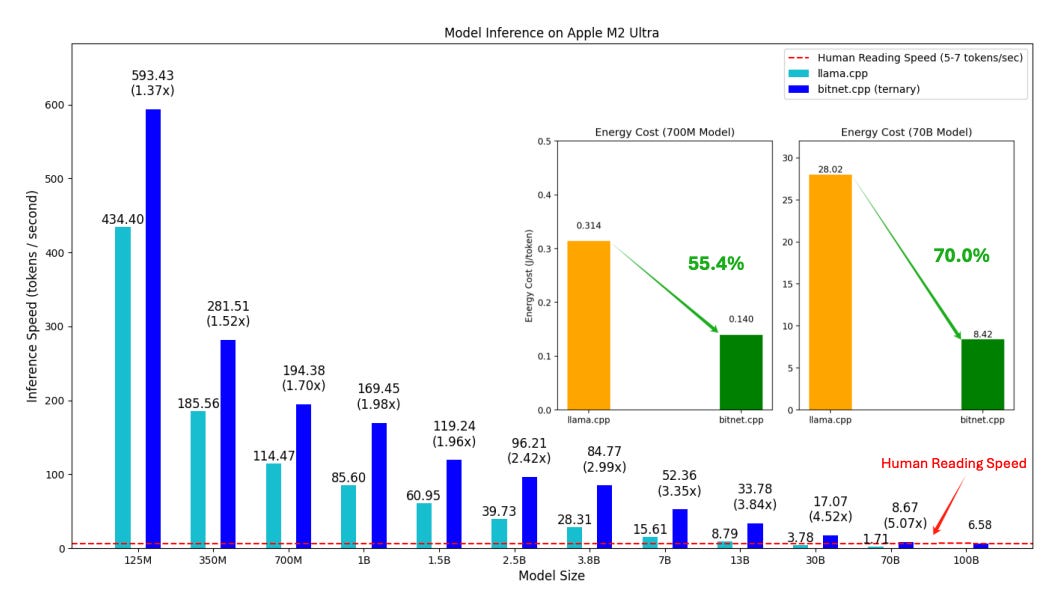

1-bit AI Infra: Part 1.1, Fast and Lossless BitNet b1.58 Inference on CPUs

Summary: Recent advances in 1-bit Large Language Models (LLMs), such as BitNet and BitNet b1.58, have paved the way for faster, more energy-efficient LLMs that can run locally on a wide range of devices. This report introduces bitnet.cpp, a software stack built to realize the potential of 1-bit LLMs by providing optimized kernels for fast, lossless inference on CPUs. In extensive experiments, bitnet.cpp achieved speedups of 2.37x to 6.17x on x86 CPUs and 1.37x to 5.07x on ARM CPUs across various model sizes, making it a promising option for efficient, scalable local LLM deployment.
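For readers wondering why such models can run quickly on CPUs, the sketch below illustrates the underlying idea rather than bitnet.cpp itself. BitNet b1.58 restricts weights to the three values -1, 0, and +1, so a matrix-vector product needs only additions and subtractions plus one scale multiply per output. The helper names `absmean_ternary` and `ternary_matvec` are hypothetical, and the rounding rule assumes the absmean scheme described for BitNet b1.58; the optimized kernels in bitnet.cpp are considerably more involved.

```python
def absmean_ternary(weights):
    """Quantize a 2D weight matrix to {-1, 0, +1} plus a single float scale.

    Assumes absmean scaling: divide by the mean absolute weight, round to the
    nearest integer, then clip to the range [-1, 1].
    """
    magnitudes = [abs(w) for row in weights for w in row]
    scale = sum(magnitudes) / len(magnitudes)
    if scale == 0.0:  # all-zero matrix: nothing to quantize
        return [[0 for _ in row] for row in weights], 0.0
    quantized = [
        [max(-1, min(1, round(w / scale))) for w in row] for row in weights
    ]
    return quantized, scale


def ternary_matvec(quantized, scale, x):
    """Multiply ternary weights by vector x using only adds and subtracts."""
    out = []
    for row in quantized:
        acc = 0.0
        for q, value in zip(row, x):
            if q == 1:
                acc += value
            elif q == -1:
                acc -= value
            # q == 0 contributes nothing, so the input is skipped entirely
        out.append(acc * scale)  # one multiplication per output element
    return out


weights = [[0.9, -0.05, -1.1], [0.4, 0.0, -0.6]]
q_weights, w_scale = absmean_ternary(weights)
y = ternary_matvec(q_weights, w_scale, [2.0, 3.0, 5.0])
```

Note that the quantization step here is lossy with respect to the original float weights. "Lossless" in the summary refers to inference on a model that is already ternary.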

LightRAG: Simple and Fast Retrieval-Augmented Generation

Summary: LightRAG is a significant step forward for Retrieval-Augmented Generation (RAG) systems, addressing weaknesses in contextual understanding and in how data is structured. Unlike typical RAG systems, LightRAG incorporates graph structures into text indexing and retrieval, improving its ability to capture complex interdependencies and retrieve more contextually relevant information. Its dual-level retrieval scheme combines low-level and high-level knowledge discovery to produce accurate and comprehensive results. An incremental update algorithm lets LightRAG integrate new data efficiently and keep up with changing information. Experiments show that LightRAG substantially outperforms conventional RAG systems in retrieval accuracy and speed, setting a new standard for responsive, knowledge-driven LLM applications.
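The combination of a graph index, two retrieval levels, and incremental updates can be pictured with a toy example. The class below is a hypothetical sketch, not LightRAG's API: `ToyGraphIndex` and its methods are invented for illustration, and the entity triples and theme tags are supplied by hand, whereas a real system would extract them from text with an LLM.

```python
from collections import defaultdict


class ToyGraphIndex:
    """Toy graph index: entities are nodes, relations are labelled edges."""

    def __init__(self):
        self.edges = defaultdict(set)   # entity -> {(relation, neighbour)}
        self.themes = defaultdict(set)  # theme keyword -> {entity}

    def add_document(self, triples, themes):
        """Merge new triples and theme tags into the index without a rebuild."""
        for head, relation, tail in triples:
            self.edges[head].add((relation, tail))
            self.edges[tail].add((relation, head))
        for theme, entities in themes.items():
            self.themes[theme].update(entities)

    def low_level(self, entity):
        """Specific lookup: the relations attached to one named entity."""
        return sorted(self.edges.get(entity, set()))

    def high_level(self, theme):
        """Broad lookup: every entity filed under a theme keyword."""
        return sorted(self.themes.get(theme, set()))

    def retrieve(self, entity, theme):
        """Combine both levels into a single context dictionary."""
        return {"low": self.low_level(entity), "high": self.high_level(theme)}


index = ToyGraphIndex()
index.add_document(
    triples=[("beekeeper", "manages", "hive")],
    themes={"agriculture": {"beekeeper", "hive"}},
)
# A later document is merged into the same graph (the incremental update).
index.add_document(
    triples=[("hive", "produces", "honey")],
    themes={"agriculture": {"honey"}},
)
context = index.retrieve(entity="hive", theme="agriculture")
```

After the second `add_document` call, the low-level lookup for "hive" returns edges from both documents, which is the point of merging into a shared graph rather than re-indexing everything.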

CoTracker3: Simpler and Better Point Tracking by Pseudo-Labelling Real Videos

Summary: Accurately tracking points in real-world video has long been difficult. Many systems train on synthetic data, which limits performance because of the gap between synthetic and real footage. CoTracker3 is a new tracking model with a semi-supervised training method that uses real, unlabeled videos, with pseudo-labels generated by off-the-shelf models. Its simplified architecture removes unnecessary components and achieves state-of-the-art results while using 1,000 times less data than earlier approaches. The study also shows how scaling up with additional unlabeled data improves real-world tracking accuracy, offering useful insights for future work on video tracking.
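The pseudo-labelling loop can be sketched in a few lines. Everything here is a simplified assumption rather than CoTracker3's training code: the "teachers" are trivial stand-in functions instead of pretrained trackers, videos are reduced to metadata dictionaries, and picking one teacher at random per video is a choice made for this example.

```python
import random


def static_teacher(video, query):
    """Stand-in tracker that predicts the point never moves."""
    return [query for _ in range(video["num_frames"])]


def drift_teacher(video, query):
    """Stand-in tracker that predicts a one-pixel drift to the right per frame."""
    x, y = query
    return [(x + t, y) for t in range(video["num_frames"])]


def build_pseudo_labels(videos, teachers, queries_per_video=2, seed=0):
    """Label unlabeled videos with tracks predicted by off-the-shelf teachers."""
    rng = random.Random(seed)
    dataset = []
    for video in videos:
        teacher = rng.choice(teachers)  # assumption: one random teacher per video
        for _ in range(queries_per_video):
            # Sample a query point inside the frame.
            query = (
                rng.uniform(0, video["width"] - 1),
                rng.uniform(0, video["height"] - 1),
            )
            track = teacher(video, query)  # the teacher's output is the label
            dataset.append({"video": video["name"], "query": query, "track": track})
    return dataset


def mean_l1(predicted, target):
    """Average absolute coordinate error between two tracks of equal length."""
    total = sum(abs(px - tx) + abs(py - ty)
                for (px, py), (tx, ty) in zip(predicted, target))
    return total / (2 * len(target))


videos = [
    {"name": "clip_a", "num_frames": 4, "width": 64, "height": 48},
    {"name": "clip_b", "num_frames": 6, "width": 64, "height": 48},
]
pseudo_dataset = build_pseudo_labels(videos, [static_teacher, drift_teacher])
```

A student tracker would then be trained to minimize a loss such as `mean_l1` between its own predictions and the pseudo-label tracks, so no human annotation of the real videos is needed.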