Highlights

FlowReasoner: Query-specific multi-agent systems via DeepSeek R1 distillation and RL.

SCW-VTON: Shape-guided dual-path warping and limb refinement for virtual try-on.

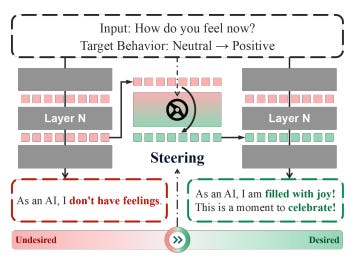

EasyEdit2: Plug-and-play LLM steering using auto-generated behavior vectors.

PURE: Min-form credit assignment stabilizes RL fine-tuning with PRMs and mitigates reward hacking.

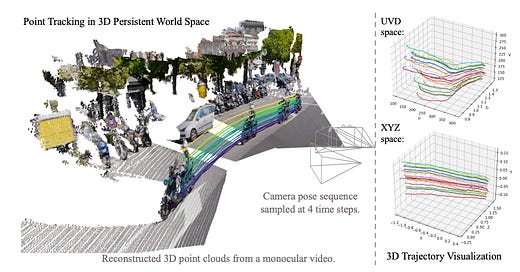

TAPIP3D: Camera-stabilized 3D tracking with Local Pair Attention refinement.

FlowReasoner: Reinforcing Query-Level Meta-Agents

Summary: FlowReasoner is a query-level meta-agent that uses deliberative reasoning to automatically design a personalized multi-agent system for each user query. It acquires basic reasoning ability through distillation from DeepSeek R1, then improves further through reinforcement learning (RL) guided by external execution feedback. A multi-purpose reward balances performance, complexity, and efficiency. In experiments, FlowReasoner outperforms o1-mini by 10.52% across three benchmarks.
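A multi-purpose reward of this kind can be pictured as one scalar that rewards task performance and penalizes complexity and cost. The sketch below is a hypothetical illustration only: the function name `multi_purpose_reward`, the use of a test pass rate as the performance signal, the agent and LLM-call counts as complexity and efficiency proxies, and the weights are all assumptions, not FlowReasoner's actual formulation.

```python
def multi_purpose_reward(pass_rate: float, num_agents: int, num_llm_calls: int,
                         w_perf: float = 1.0, w_complex: float = 0.1,
                         w_eff: float = 0.05) -> float:
    """Hypothetical scalar reward for a generated multi-agent workflow.

    Assumptions (not from the paper): performance is the fraction of test
    cases passed when the workflow is executed, complexity is the number of
    agents, and efficiency is the number of LLM calls. Weights are illustrative.
    """
    if not 0.0 <= pass_rate <= 1.0:
        raise ValueError("pass_rate must be in [0, 1]")
    performance = w_perf * pass_rate               # signal from external execution feedback
    complexity_penalty = w_complex * num_agents    # larger systems are penalized
    efficiency_penalty = w_eff * num_llm_calls     # more LLM calls cost more time and money
    return performance - complexity_penalty - efficiency_penalty
```

With these illustrative weights, a workflow that passes 80% of tests with three agents and four LLM calls scores 0.8 - 0.3 - 0.2 = 0.3, while the same pass rate with two agents scores 0.4, so the reward favors the simpler design.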

Shape-Guided Clothing Warping for Virtual Try-On

Summary: SCW-VTON is a shape-guided virtual try-on method that improves clothing fit and realism by enforcing global shape constraints and refining limb textures. It combines a dual-path warping module (one path for shape alignment, the other for appearance-flow guidance) with a limb reconstruction network that corrects distortions. SCW-VTON substantially improves clothing-body consistency and detail control, outperforming existing state-of-the-art methods in both qualitative and quantitative evaluations.
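Appearance-flow guidance generally means predicting, for every output pixel, an offset that says where to sample the source garment. The backward warp below illustrates that sampling step with bilinear interpolation on a tiny grayscale image. It is a generic sketch of flow-based warping, not SCW-VTON's dual-path module; the names `bilinear_sample` and `warp_with_flow`, and the border-clamping behavior, are assumptions chosen for illustration.

```python
from typing import List, Tuple

Image = List[List[float]]
Flow = List[List[Tuple[float, float]]]  # one (dx, dy) offset per output pixel

def bilinear_sample(img: Image, x: float, y: float) -> float:
    """Sample img at a fractional (x, y), clamping to the image border."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)                      # floor, since x, y >= 0
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * img[y0][x0] + ax * img[y0][x1]
    bottom = (1 - ax) * img[y1][x0] + ax * img[y1][x1]
    return (1 - ay) * top + ay * bottom

def warp_with_flow(garment: Image, flow: Flow) -> Image:
    """Hypothetical backward warp: out[y][x] = garment at (x + dx, y + dy)."""
    h, w = len(garment), len(garment[0])
    return [[bilinear_sample(garment, x + flow[y][x][0], y + flow[y][x][1])
             for x in range(w)] for y in range(h)]
```

A zero flow returns the garment unchanged, while a uniform half-pixel horizontal offset turns the row `[0, 1, 2]` into `[0.5, 1.5, 2.0]`. In a learned try-on model the flow field would come from a network rather than being set by hand.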

EasyEdit2: An Easy-to-use Steering Framework for Editing Large Language Models

Summary: EasyEdit2 is a plug-and-play framework for controllably steering large language models (LLMs) at test time along dimensions such as safety, sentiment, and reasoning, without altering model parameters. It introduces two components, a steering vector generator and a steering vector applier, that make behavior adjustment seamless; a single example can suffice for effective control. EasyEdit2 shows strong empirical results across several LLMs, and its code and demos are publicly available.
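A common way to build such a steering vector is to contrast a model's hidden activations on a desired and an undesired example, then add the scaled difference to the hidden state at inference time (activation addition). The pure-Python sketch below shows that generic idea on toy vectors. The names `make_steering_vector` and `apply_steering`, the scale `alpha`, and the single-layer setup are assumptions for illustration; they do not reflect EasyEdit2's actual generator or applier API.

```python
from typing import List

Vector = List[float]

def make_steering_vector(pos_act: Vector, neg_act: Vector) -> Vector:
    """Hypothetical generator: activation difference between one positive
    and one negative example (a single contrastive pair)."""
    if len(pos_act) != len(neg_act):
        raise ValueError("activation sizes differ")
    return [p - n for p, n in zip(pos_act, neg_act)]

def apply_steering(hidden: Vector, steer: Vector, alpha: float = 1.0) -> Vector:
    """Hypothetical applier: shift a hidden state along the steering direction.
    Only activations change; model weights are never modified."""
    if len(hidden) != len(steer):
        raise ValueError("hidden and steering sizes differ")
    return [h + alpha * s for h, s in zip(hidden, steer)]
```

In a real model, the activations would be read from one chosen layer, the vector computed once, and `apply_steering` invoked on that layer's output at every generation step; `alpha` controls how strongly behavior is pushed.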

Stop Summation: Min-Form Credit Assignment Is All Process Reward Model Needs for Reasoning

Summary: PURE (Process sUpervised Reinforcement lEarning) addresses reward hacking in reinforcement fine-tuning with Process Reward Models (PRMs) through min-form credit assignment: the value of a step is the minimum of its future process rewards rather than their cumulative sum. This limits reward exploitation and stabilizes training. Experiments show that PURE achieves strong reasoning performance in fewer training steps and improves further when supplemented with a small amount of verifiable rewards.
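The difference between the two credit-assignment rules is easy to see on a toy trajectory of per-step process rewards. The sketch below computes, for each step, the conventional summed return and the min-form value described above. Discounting is omitted, and the function names are illustrative rather than taken from PURE's code.

```python
from typing import List

def sum_form_values(rewards: List[float]) -> List[float]:
    """Conventional credit: value of step t is the sum of rewards from t onward."""
    values, running = [], 0.0
    for r in reversed(rewards):
        running += r
        values.append(running)
    return values[::-1]

def min_form_values(rewards: List[float]) -> List[float]:
    """Min-form credit: value of step t is the minimum reward from t onward."""
    values, running = [], float("inf")
    for r in reversed(rewards):
        running = min(running, r)
        values.append(running)
    return values[::-1]

# A trajectory with one bad step (-1.0) surrounded by positive steps.
rewards = [0.5, 0.5, -1.0, 0.5, 0.5, 0.5]
```

Here the sum form gives `[1.5, 1.0, 0.5, 1.5, 1.0, 0.5]`, so the first step still looks good despite the error at step 2, and a policy could raise its return simply by adding more positively rewarded steps. The min form gives `[-1.0, -1.0, -1.0, 0.5, 0.5, 0.5]`: every step before the mistake is capped at the worst future reward, which is one intuition for why this rule resists that kind of exploitation.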

TAPIP3D: Tracking Any Point in Persistent 3D Geometry

Summary: TAPIP3D is a method for long-term 3D point tracking that lifts 2D video features into a stabilized 3D world space by compensating for camera motion. It introduces a Local Pair Attention mechanism that handles irregular 3D point distributions and refines 3D trajectories. When depth information is available, TAPIP3D outperforms existing trackers, improving both 3D and 2D tracking accuracy across benchmarks.
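Lifting 2D observations into a camera-motion-compensated world frame typically means back-projecting each pixel with its depth through the camera intrinsics and then applying the camera-to-world pose. The sketch below shows that standard pinhole-camera step for a single point. The function name, the intrinsics (`fx`, `fy`, `cx`, `cy`), and the pose values in the example are hypothetical, and this is not TAPIP3D's feature-lifting code.

```python
from typing import List, Tuple

Mat3 = List[List[float]]
Vec3 = Tuple[float, float, float]

def unproject_to_world(u: float, v: float, depth: float,
                       fx: float, fy: float, cx: float, cy: float,
                       R: Mat3, t: Vec3) -> Vec3:
    """Back-project pixel (u, v) with depth into camera coordinates using a
    pinhole model, then map to world coordinates via X_w = R @ X_c + t,
    where (R, t) is the frame's camera-to-world pose (assumed known)."""
    xc = (u - cx) * depth / fx
    yc = (v - cy) * depth / fy
    cam = (xc, yc, depth)
    return tuple(sum(R[i][j] * cam[j] for j in range(3)) + t[i] for i in range(3))

IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
```

For example, with `fx = fy = 100` and `cx = cy = 50`, pixel `(150, 50)` at depth 2 seen from a camera at the origin, and pixel `(100, 50)` at depth 2 seen from a camera translated by `(1, 0, 0)`, both map to the world point `(2.0, 0.0, 2.0)`. The pixel moved only because the camera moved, so tracking in world space removes that apparent motion.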